Tasks

GPT Chat Task (LLM Task)

This task manages all the messages and calling of LLMs to enable rich AI features in Stubber.

It can be used to pass in staring messages and get a response from an LLM assistant.

The task will use the default feedback action _update_from_chat_assistant to respond with the assistant's reply.

To see a list of supported LLM Models, see the LLM Models page.

Basic usage

Start a chat with the default model.

Note: This task has a wide variety of possible use cases, see the Examples section.

Failsafes

Due to the nature of AI chat assistants, there is a risk of infinitely repeating conversations when two auto-responding bots find themselves talking to one another. To mitigate this risk, two failsafe mechanisms are in place:

Message Count Failsafe

The stop_on_high_messages failsafe prevents a chat from exceeding 200 messages in a single conversation. This counts all message types (user, system, and assistant) within the current chat context.

This message count can be reset by using the message_operation: "set" parameter, which clears previous messages. The failsafe can be disabled by setting stop_on_high_messages: false, or adjusted to a different limit by setting a specific number.

Interaction Count Failsafe

The stop_on_high_interactions failsafe tracks how many times a specific chat (identified by chat_name) has interacted with a model, with a default limit of 1000 interactions.

Unlike the message count, this counter cannot be reset and persists even when messages are cleared. This provides protection against scenarios where a process might clear messages but still be caught in an infinite bot-to-bot conversation loop. The failsafe can be disabled by setting stop_on_high_interactions: false, or adjusted to a different limit by setting a specific number.

Both failsafe limits are enabled by default to prevent runaway processes.

Message Data Extraction

The task automatically extracts and processes certain structured data from the assistant's responses:

HTML-style Tags

When the assistant includes content within HTML-style tags (e.g., <thinking>my thought process</thinking>), the task will:

- Extract the content and add it to

message_data_extracted.{tag_name}in the response data - Remove the tagged content from the message

JSON Code Blocks

When the assistant includes JSON code blocks (enclosed in ```json), the task will:

- Parse the JSON content and add it to

message_data_extracted.jsonin the response data - Keep the JSON blocks in the original message

The extracted data is available in the task result under payload._gpt_chat_details.message_data_extracted. For example:

This feature can be disabled by setting the extract_data_from_message parameter to false, which may be necessary when working with responses that contain actual HTML content.

Parameters

messages optional array

An array of message objects.

The messages to submit for a chat completion.

The messages in the array should be sorted ascending chronologically, meaning that the message at the first index in the array should be the earliest message, and the last message in the array should be the latest.

Each chat has an array of messages that is automatically handled by the Stubber system. When there is a back and forth discussion between an assistant and a user, the messages are appended to this array, unless the message_operation is set, then the messages are overwritten by the messages of the current task.

Default: []

Show child attributes

content required string

The content of a specific message in the messages array.

This is the string value of the message text.

role required string

The role of a specific message in the messages array.

This can be one of system, user and assistant:

system: indicates a message to the system, and could be used to give instructions or rules to the assistant.user: messages from the user, this is the role that should be used for questions or statements that the user wants the assistant to respond on.assistant: the role for messages where the assistant responded.

Theseassistantmessages can also be included to simulate the assistant's responses.

Not all models support these roles in the same way.

Some models only allow a single system role. Some models require a user role to start the conversation.

It is important to know the requirements of the model you are using.

message_operation optional string

This can be either set or append. It defines the operation to use for the messages in the active chat (via gptchattaskuuid). The set operation erases the previous messages of the chat and sets the messages passed in this instance of the task as the messages for the chat completion. The append operation appends the messages of the current task to the existing messages in the chat.

Default: append

model optional string

The model that the task should use to do this specific instance of chat completion. If it is the first instance of a chat, the model will be saved for next instances in the same chat. This parameter is thus not required, but recommended for at least the initial chat to set the desired model.

See Models for a full list of supported models.

Default: [changes based on current models available]

submit_to_model optional boolean

Whether or not the task should actually submit the messages to the model/assistant for a response. This could be useful to set to false when a chat should be initiated by the assistant with a default message. Example:

Default: true

set_model optional boolean

Override the model in the specific chat with this model. This will change the model for future chat instances in cases

where the specific model is not specified. If a single task has model and set_model, the set_model model will be

used

Examples:

gpt-4o

Default: null

gptchattaskuuid optional array

The gptchattaskuuid is the globally unique reference used inside Stubber to keep track of the specific details of a chat, such as the model details, the previous messages, token usage etc. This is by default a deterministic uuid generated from the stub's stubref, such that each stub automatically has a unique chat. It is only required to specify this if you want fine grain control over which GPT tasks should use which chats across various stubs. If you want multiple different chats on a single stub, use chat_name instead.

Default: {{#deterministicuuid stub.stubref}}

chat_name optional array

The human readable name of the chat, such as "supervisor" or "contractor". Each chat has a unique id, gptchattaskuuid, that is used to enable tasks to keep appending messages and instructions to the same chat, continuously building on the chat context. This unique id is generated with a combination of the stubref and the chat_name. Chats happening on different stubs are thus automatically different. If you want to have different chats on the same stub, you have to give each chat its own chat_name, and then use this chat_name in all usages of the gpt_chat_task for that chat.

Default: ""

function_call optional string or object

This parameter determines whether functions will be added to the chat completion task. With this parameter set to auto, the assistant will automatically choose when to call a function, and which function to call. If the assistant calls a function and everything is set up correctly, as described here, the action with the same name as the function will be run on the stub of the chat.

Force an action

This parameter can be used to force a specific action to be run. This can be done by specifying the parameter as an object {"name": "get_weather"}. This example will force the assistant to call the get_weather action.

Prevent actions

To prevent the model from calling any actions, set this parameter to false.

Default: auto

You can use this parameter in conjunction with the disable_dynamic_tasks setting on the called action to do 1 shot requests from a model that always result in an action being run on the stub.

tool_choice optional string or object

This parameter determines whether tools will be used in the chat completion task when function_method is set to tools. With this parameter set to auto, the assistant will automatically choose when to use a tool, and which tool to use. If the assistant uses a tool and everything is set up correctly, the action with the same name as the tool will be run on the stub of the chat.

Force a specific tool

This parameter can be used to force a specific action to be run. This can be done by specifying the parameter as an object {"type": "function", "function": {"name": "get_weather"}}. This example will force the assistant to call the get_weather action.

Prevent tools

To prevent the model from using any tools, set this parameter to "none".

Default: auto

You can use this parameter in conjunction with the disable_dynamic_tasks setting on the called action to do 1 shot requests from a model that always result in an action being run on the stub.

functions optional array

An array of function objects to make available to the assistant. It is important to note that actions with the action_meta containing ai_function_calling: true, will be added to the functions the assistant has access to automatically.

This parameter should only rarely be required. Below is an example of a single function object:

Function objects that are generated automatically from actions have the same structure. The fields of the action becomes the properties, the help of each field becomes the description of the property. The name of the action becomes the name of the function. The description of the action becomes the description of the function.

Default: null

tools optional array

An array of tool objects to make available to the assistant. This parameter is used when the function_method is set to tools. It is important to note that actions with the action_meta containing ai_function_calling: true, will be added to the tools the assistant has access to automatically.

This parameter should only rarely be required. Below is an example of a single tool object:

Tool objects that are generated automatically from actions have the same structure as function objects. The fields of the action becomes the properties, the help of each field becomes the description of the property. The name of the action becomes the name of the function. The description of the action becomes the description of the function.

Default: null

temperature optional array

This is a parameter to change the consistency of the response of the assistant. Lower values, such as 0.15, result in more consistent responses. Higher values, such as 0.80, will generate more creative and diverse results.

The temperature is not supported by all models and models behave differently on different temperature settings. If the model does not support it, it will be ignored.

Default: null

reasoning_effort optional string

Controls the amount of reasoning tokens used by models that support reasoning. This parameter determines how much computational effort the model dedicates to thinking through the problem before responding.

Available levels:

"low": 1,024 reasoning tokens - faster, suitable for simpler tasks"medium": 8,192 reasoning tokens - balanced approach for most use cases"high": 24,576 reasoning tokens - maximum reasoning depth for complex problems

This parameter only works with models that support reasoning capabilities. It will be ignored by models that don't support this feature.

Default: null

include_attachments optional object

This parameter can be used to automatically add files and images to the messages of the chat. File examples can be images, videos, PDFs, and audio files. This is useful for LLMs that support multimodal interactions, such as images or other supported files, that are not part of the regular messages.

The parameter takes an object with two properties:

include_method: either"prepend"or"append"to control where attachments are addedattachments: an array of objects where each object needs to have afileuuidproperty

Use this to:

- add images to the LLM chat context

- add videos to the LLM chat context for models that support video (Like Gemini)

- add PDF files to the LLM chat context for models that support PDF files (Like Gemini, Claude)

- add audio files to the LLM chat context for models that support audio files

Multimodal models are a powerful way to enhance the interaction with the assistant, as they can understand and respond to images, videos, and other file types. Use this parameter to add these files to the chat context easily.

Parameter Examples:

Prepend the attachments of the current stub post:

Append files from the current stub:

Prepend a single file with a specific fileuuid:

When using "prepend", attachments will be inserted just before the last message in the messages array.

When using "append", attachments will be added at the end of the messages array.

Example of included file in the messages

Default: null

function_method optional string

The method to use for function calls, this can be either tools or functions. These two methods are not interchangeable in the same chat, if you use a specific function_method in a chat, you have to use the same method for all tasks in that chat.

The default function_method for all models released from May 2025 onwards is tools. The default function_method for all models released before May 2025 is functions.

Default: null

max_tokens optional integer

The maximum tokens that the assistant can respond with. In general this is not required, and should mainly be used if an abnormally short response is required.

Default: null

set_system_message optional string

The system message passed here will replace the system message in the existing chat. It will remain the system message for all subsequent chats in the same conversation. So it will not last just a single task instance, but will be the new system message until it is changed again.

Default: null

response_format optional object

This works for models that support this feature. For the assistant to respond in only json, this parameter has to be set to {"type": "json_object"}, and you have to mention the word "json" somewhere in the system message.

Default: null

extract_data_from_message optional boolean

Set this to false if you do not want the model to extract tags such as <thinking>...</thinking> or <span>...</span> as data points. This

is required if you attempt to force the model to respond in HTML, since all tags will be extracted as data points and be removed from the message.

Default: true

disable_model_response optional boolean

When this is set to true, the task will not publish the feedback action, by default _update_from_chat_assistant_task. The task can still execute actions, which can be disabled with disable_model_action_execution.

Default: false

disable_model_action_execution optional boolean

Controls whether the LLM model can execute Stubber actions. When set to true, the model will still see available functions/tools (actions marked with ai_function_calling: true in their action_meta), and can choose to call them, but Stubber will not convert these function calls into actual action executions.

This parameter is useful for testing function calling behavior without triggering real actions in the system, or if you want the model to provider specific structured data with a custom function.

Default: false

assistant_response_action_name optional string

The feedback action that the task will call with the result of the chat completion.

Default: _update_from_chat_gpt

set_assistant_response_action_name optional string

This will set the feedback action that the task will call with the result of the chat completion. This will be the feedback action for all future tasks in the chat.

Default: null

append_response_to_messages optional string

Each chat has an array of messages that is automatically handled by the Stubber system. When there is a back and forth discussion between an assistant and a user, the messages are appended to this array, unless the message_operation is set, then the messages are overwritten. When append_response_to_messages is false, the response of the assistant is not appended to the array of messages of the chat.

Default: true

stop_on_high_messages optional integer boolean

Controls the maximum number of messages allowed in a single chat conversation before the task is blocked. This count includes all message types (user, system, assistant) present in the chat at the time of execution.

This parameter serves as a failsafe mechanism to prevent infinite conversations, particularly in bot-to-bot interactions. The default limit is set to 200 messages, which can be:

- Increased by specifying a higher number

- Disabled by setting to

false(not recommended) - Reset by using

message_operation: "set"to clear previous messages

The default limit is intentionally conservative since model performance can degrade with very long message histories. It's recommended to periodically reset the conversation using message_operation: "set" rather than significantly increasing this limit.

Examples:

false- Disables the message limit check500- Sets a custom message limittrue- Uses the default limit (200)

Default: 200

stop_on_high_interactions optional string

Controls the maximum number of model interactions allowed for a specific chat (identified by chat_name) before the task is blocked. An interaction is counted each time the chat communicates with the model, regardless of the number of messages exchanged.

This parameter provides an additional failsafe layer that:

- Persists across message resets (unlike stop_on_high_messages)

- Cannot be reset by clearing messages

- Helps prevent infinite loops in automated conversations

The interaction counter:

- Increments with each model call

- Persists even when messages are cleared via

message_operation: "set" - Is specific to each unique chat

Examples:

false- Disables the interaction limit check500- Sets a custom interaction limittrue- Uses the default limit (1000)

Default: 1000

action_call_result_method optional string

By default, when the assistant decides to run an action, a dynamic task is added to that action to return the result of the action back to the assistant, allowing the assistant to respond on the result of the action it initiated. A custom dynamic task can be passed instead of the default one by setting this parameter to custom.

Default: null

dynamic_tasks optional array

The tasks to dynamically add to an action that the assistant has chosen to run. The default task that is added, return_gpt_function_data, returns the result of the action, the stubpost, to the assistant. The assistant will then respond normally with the passed feedback action, by default _update_from_chat_assistant_task.

This parameter is only used if action_call_result_method is set to custom. If it is specified as custom, only the tasks specified in dynamic_tasks will be added to the action.

Default:

Result

Properties

response

The response as it was received from Open AI.

response.id

The unique identifier for the chat completion in Open AI's system. This is not used in Stubber.

response.object

The type of the response object, this should always be chat.completion.

response.created

The timestamp at which the response was created in Open AI's system.

response.model

The exact model that was used for the chat completion. This property could be of some significance, since it is not always exactly the model that is passed as a parameter. This is the case here. No model was passed in the Basic Usage section, so the default gpt4o-mini was used, yet the value in the response is gpt4o-mini.

response.choices

The choices object is the property that contains the message with some additional information. It should very rarely be necessary to use this property. Since the message is the desired result from this task, we include it in the top level of the response for convenience.

response.usage

The details of the token usage can be found in this property.

prompt_tokens: the amount of tokens that the prompt, ie. the messages, used.completion_tokens: the amount of tokens in the completion, ie. the response of the assistant.total_tokens: a sum of theprompt_tokensand thecompletion_tokens.

response.system_fingerprint

This is a unique identifier for Open AI for the system that was responsible for handling the request.

response.message

This is the response of the assistant on the prompt or messages that was provided for the task. This message will also be passed as the message of the feedback action, _update_from_chat_assistant_task.

_gpt_chat_details.message_data_extracted

When message_data_extraction is enabled, this object contains any data that was extracted from HTML-style tags or JSON code blocks in the assistant's response. The data is organized by tag name or "json" for JSON blocks. If there are multiple JSON blocks, the data will be numbered and the key will be json, json_2, etc.

For example:

Action Meta

All Stubber actions have the action_meta property. For gpt_chat_task, this property can be used to make actions

available to AI assistants for calling. It can also be used to change the behavior when only specific actions are run

by the AI assistant.

Here is an example action_meta object for a get_weather function. The initial ai_function_calling: true is what

makes the action available for calling by AI assistants. Additional parameters are then all nested inside of

ai_details.

See Action Meta for more information on the action_meta property of actions.

Examples

In these examples, the wider picture of what happens will be shown, as the most important part of the task result is the assistant's response message, and that is fairly simple to understand. Which parameters to use in which scenario is the secret sauce of this task.

Greet new user with the weather

We have an action called greet_new_users with the gpt_chat_task definition below.

The task definition:

The assistant calls a Stubber action get_current_weather to get the weather in a location, and then generates a welcome message which includes the weather. This requires an action, get_current_weather, with the action_meta field ai_function_calling set to true and a text field with the name location available on the stub in the correct state.

For our get_current_weather action, we added a task with an API call to api.weatherapi.com. This task is as below:

We added an api key for api.weather.com in our stub data. As for the {{stubpost.data.location}}, when the assistant decides to call a function, the properties that the assistant uses to call the function are added to the stubpost.data of the action that the Stubber system runs on behalf of the assistant. So the stubpost.data.location value will come from the assistant.

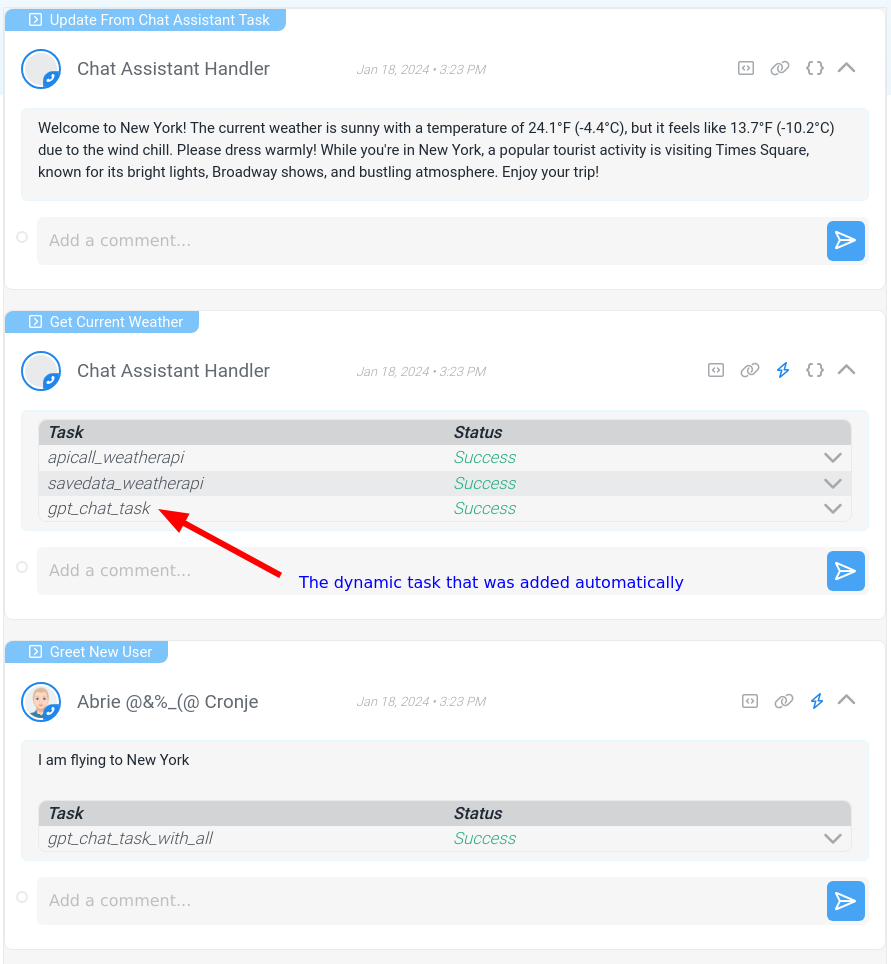

Result

Note that in the order of things happening below, the disable_model_response: true parameter ensures that there would not be an additional assistant response between steps 2 and 3. Sometimes the assistant informs the user that it is going to call a function before actually calling the function.

The order of things happening in this example is as follows:

- We run the

greet_new_usersaction with the message "I am flying to New York". - The action runs the

gpt_chat_taskdefined above, calling the assistant. - The assistant responds that the action

get_current_weather, with the location parameter set to "New York", should be ran. The dynamic task that will return the result of the action to the assistant is added to theget_current_weatheraction request. - The Stubber system automatically runs the

get_current_weatheraction, and thus the task that makes an api call toapi.weather.com. - The dynamic task returns the entire

stubpostof theget_current_weatheraction as a appended message to the assistant. - The assistant generates a greeting response as instructed in the system message, this response is added to the stub via the task feedback action,

_update_from_chat_assistant_task.

Here is a screenshot of the flow in Stubber:

Force the model to respond in Json only

This is only available for select models. You have to mention the word "json" in the system message, or the task will error.

The task definition:

Result

Here follows the result with the stubpost message as "Create a recommended people structure for my company, "The Pink Factory". We have technical, operations, support and sales teams with 5, 5, 10, 10 members in each respectively."