Using GPT

GPT Tips and Tricks (LLM)

Some tips and tricks for working with LLMs

Prompting Tips

See more detailed information on prompting.

General

To get good results from an LLM (Large Language Model), you need to give it the right input. The following tips help:

- Provide clear and concise instructions.

- Offer examples to illustrate your point.

- Specify a persona or context to frame the output.

- Outline steps, procedures, and thought processes so the LLM can articulate its reasoning.

You can also:

- Set boundaries or constraints to rule out undesirable outcomes or answers.

- Specify the desired tone or style: formal, casual, creative, and so on.

- Iterate on your prompts, refining them based on the output until the responses match what you intended.

Clear and Concise Instructions

Be clear and concise when giving instructions to the LLM: avoid ambiguous language and be as specific as possible.

- Try different verbs or adjectives to get different results.

- Shorten the prompt to get more concise results.

- Split the prompt into multiple parts to get more detailed results.
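As a hypothetical sketch of splitting a prompt into parts, the function below chains three smaller prompts and passes each answer into the next. `llm` stands in for whatever model call you use; it, `run_in_parts`, and the prompt wording are assumptions, not part of any particular API.

```python
from typing import Callable

# Hypothetical stand-in for a real LLM call: takes a prompt, returns text.
LLM = Callable[[str], str]

def run_in_parts(llm: LLM, topic: str) -> str:
    """Split one large request into smaller prompts, feeding each answer forward."""
    outline = llm(f"List the main sections for an article about {topic}.")
    details = llm(f"Expand each section below in detail:\n{outline}")
    return llm(f"Edit the following draft for clarity:\n{details}")

# Usage with a fake LLM that simply echoes the first line of its prompt.
def fake_llm(prompt: str) -> str:
    return prompt.splitlines()[0]

print(run_in_parts(fake_llm, "onboarding"))
```

Each step gets a smaller, focused prompt, which is what tends to produce more detailed output than one long request.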

Give Examples

When giving instructions to the LLM, it helps to provide examples. Examples are one of the most reliable ways to get the output you want.

There are two ways to give examples:

- System prompt then role play

- Examples in System prompt

System prompt then role play

In this method, you explain the full instructions and then ask the LLM to role play the scenario.

You then add "user" and "assistant" messages to the chat log to show the LLM an example of a good conversation.

Finally, you tell the LLM to stop the role play and start interacting with live users.
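A minimal sketch of the role-play method, assuming the `{"role": ..., "content": ...}` message format used by OpenAI-style chat APIs. The company, the dialogue, and the `build_messages` helper are hypothetical; the code only builds the message list and does not call any API.

```python
# Hypothetical system prompt: full instructions, then a request to role play.
SYSTEM_PROMPT = (
    "You are a support agent for Acme Plumbing. Answer questions about "
    "bookings politely and briefly. We will first role play an example "
    "conversation."
)

# Hypothetical example of a good conversation, as (role, content) pairs.
example_turns = [
    ("user", "Can I book a plumber for Friday?"),
    ("assistant", "Certainly! What time on Friday suits you?"),
    ("user", "Around 10am."),
    ("assistant", "Great, I've noted 10am on Friday. Can I have your address?"),
]

def build_messages(live_user_message: str) -> list[dict[str, str]]:
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    # Role-play examples added to the chat log as real user/assistant turns.
    messages += [{"role": r, "content": c} for r, c in example_turns]
    # End the role play before the live conversation starts.
    messages.append({"role": "system",
                     "content": "The role play is over. You are now talking to a live user."})
    messages.append({"role": "user", "content": live_user_message})
    return messages
```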

Examples in System prompt

In this method, you place the examples inside delimited text blocks in the system prompt.

Here is a recommended format (32 equal signs count as 1 token; see the GPT Tokenizer):
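The recommended format itself is not reproduced in this section. As a hypothetical sketch only, the helper below places each example between lines of 32 equal signs; the layout and the `system_prompt_with_examples` name are assumptions.

```python
# Per the note above, a run of 32 equal signs is a single GPT token.
DELIMITER = "=" * 32

def system_prompt_with_examples(instructions: str, examples: list[str]) -> str:
    """Hypothetical layout: instructions, then each example in a delimited block."""
    parts = [instructions, "Here are examples of good responses:"]
    for i, example in enumerate(examples, start=1):
        parts.append(f"{DELIMITER}\nExample {i}\n{DELIMITER}\n{example}")
    parts.append(DELIMITER)  # close the last example block
    return "\n\n".join(parts)

print(system_prompt_with_examples("Answer briefly.", ["Q: Hours?\nA: 8am-5pm."]))
```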

Give a Persona and Context

When giving instructions to the LLM, it can be helpful to provide a persona and context.

For a persona, this could mean:

- giving it a name

- telling it that it's an expert

- stating its years of experience

- listing the projects it has completed

- naming the places it studied

For context, this could mean:

- the company it works for

- where the company is located

- the types of clients it serves

- the general industry

- the company's products and services
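A hypothetical sketch that assembles the persona and context fields listed above into a system prompt. The dataclass field names and the sentence template are assumptions; adapt them to your own prompt.

```python
from dataclasses import dataclass

@dataclass
class Persona:
    name: str
    expertise: str
    years_experience: int
    projects: str
    studied_at: str

@dataclass
class Context:
    company: str
    location: str
    clients: str
    industry: str
    offerings: str

def persona_prompt(p: Persona, c: Context) -> str:
    """Render persona and context into the opening of a system prompt."""
    return (
        f"You are {p.name}, an expert in {p.expertise} with "
        f"{p.years_experience} years of experience. You have completed "
        f"{p.projects} and studied at {p.studied_at}.\n"
        f"You work for {c.company}, based in {c.location}, in the "
        f"{c.industry} industry. Your clients are {c.clients}. "
        f"The company offers {c.offerings}."
    )
```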

Give Procedures, Steps and Ways of Thinking

When giving instructions to the LLM, it can help to provide procedures, steps, and ways of thinking, so the LLM understands how to approach the task and what steps to take.

This could take the form of:

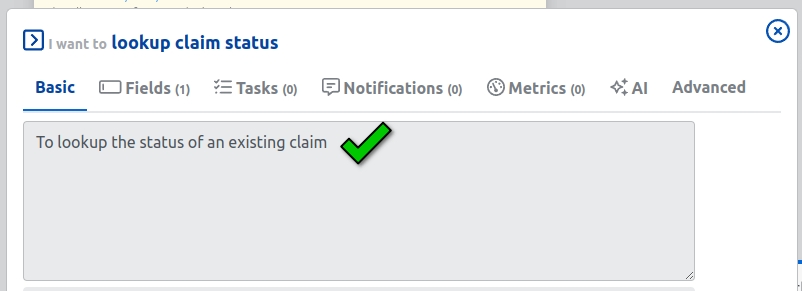
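A hypothetical sketch of giving the LLM a procedure: the steps are numbered and appended to the task description. The steps and the `procedure_prompt` helper are illustrative assumptions, not the content of the image above.

```python
# Hypothetical procedure for a support assistant.
PROCEDURE = [
    "Read the customer's message and identify what they are asking for.",
    "If any required detail is missing, ask for it before continuing.",
    "Check the request against the company's policies.",
    "Reply with a short answer, then explain your reasoning in one sentence.",
]

def procedure_prompt(task: str, steps: list[str]) -> str:
    """Append numbered steps to a task description."""
    numbered = "\n".join(f"{i}. {s}" for i, s in enumerate(steps, start=1))
    return f"{task}\n\nFollow these steps in order:\n{numbered}"

print(procedure_prompt("Handle customer support requests.", PROCEDURE))
```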

Action descriptions

To get the best performance when describing your actions, follow these guidelines:

Provide extremely detailed descriptions. This is by far the most important factor in performance. Your descriptions should explain every detail about the action, including:

- What the action does

- When it should be used (and when it shouldn't)

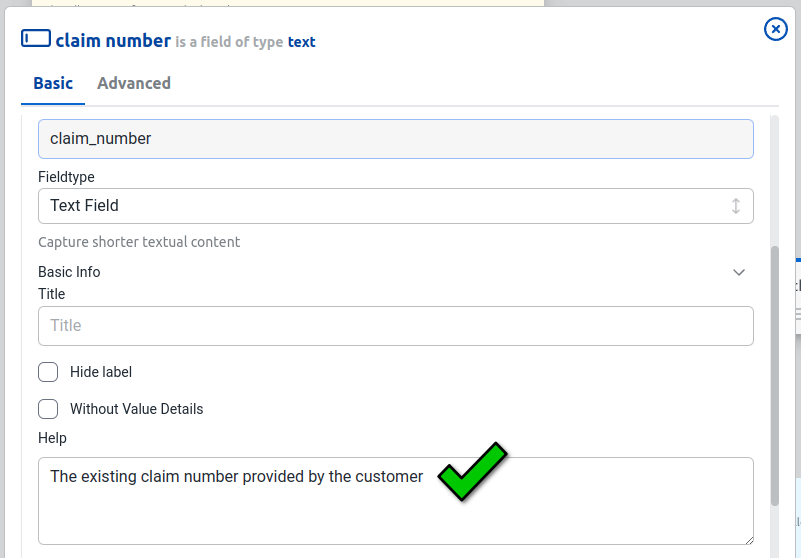

- What each field means and how it affects the action's behavior (use the help text in the field settings)

- Any important caveats or limitations, such as what information the action does not return, especially if the action name doesn't make this clear

The more context you can give about your actions, the better the AI will be at deciding when and how to use them. Aim for at least 3-4 sentences per action description, more if the action is complex.

Prioritize descriptions over examples. While you can include examples of how to use an action in its description or in a prompt, this is less important than having a clear and comprehensive explanation of the action's purpose. Only add examples after you've fully fleshed out the action description.
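Stubber's action configuration isn't reproduced here. As an analogous, hypothetical example, this is a detailed description written in the JSON-schema tool format used by Anthropic's tool-use API (`name`, `description`, `input_schema`). The `lookup_order_status` action and its field are invented for illustration.

```python
# Hypothetical action definition; only the name/description/input_schema
# layout follows Anthropic's tool format.
lookup_order_status = {
    "name": "lookup_order_status",
    "description": (
        "Looks up the current status of a single customer order by its order number. "
        "Use it when the user asks where their order is or whether it has shipped. "
        "Do not use it for refunds or for changing an order. "
        "It returns only the status and estimated delivery date; it does not return "
        "item details, prices, or payment information."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "order_number": {
                "type": "string",
                # Field-level help text: what the field means and its expected form.
                "description": "The order number exactly as the customer gave it, e.g. 'A-10234'.",
            },
        },
        "required": ["order_number"],
    },
}
```

The description covers what the action does, when to use it and when not to, and what it does not return, in four sentences; the field's own `description` plays the role of its help text.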

Hallucinations

To minimize and handle AI hallucinations effectively, it's essential to focus on both prevention and detection. Preventing hallucinations is the primary strategy, as it reduces the chances of errors occurring in the first place. However, robust detection mechanisms play a key role in identifying any hallucinations that do arise and allow for prompt correction or recovery.

By combining preventative measures and detection tools, you can help keep AI outputs reliable, accurate, and trustworthy.

Avoiding Hallucinations

A good checklist for avoiding hallucinations:

- The prompts are broken up into clear sections

- Each Stubber AI action has a clear description of when to use it

- Important fields on Stubber AI actions have good help text

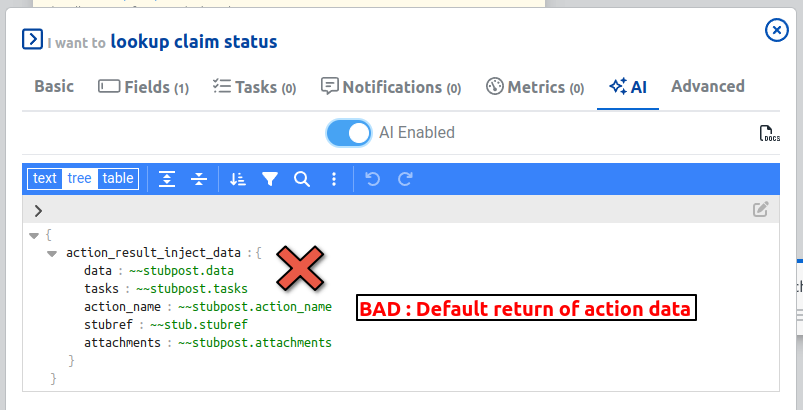

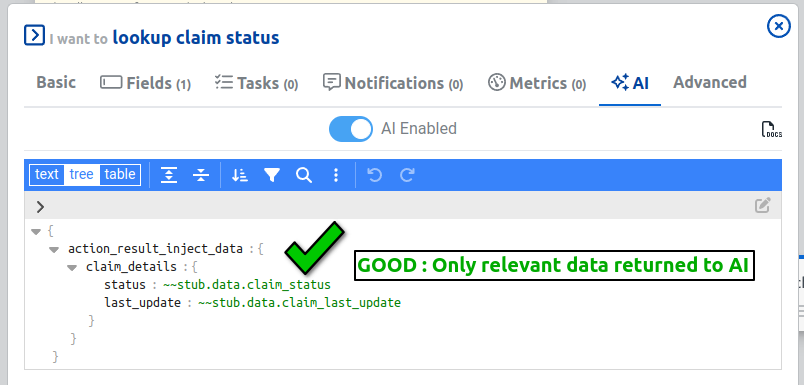

- IMPORTANT: All Stubber AI actions should return very clean data (no AI action should use the default return data)
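What Stubber's default return data contains isn't described in this section. As a generic, hypothetical sketch of "clean" return data, the function below reduces a verbose raw payload to the few fields the AI needs, under descriptive names. The payload shape, field names, and `clean_return` helper are assumptions.

```python
# Hypothetical raw result from a backend lookup: far more than the AI needs.
raw_result = {
    "status_code": 200,
    "headers": {"content-type": "application/json"},
    "data": {
        "order": {
            "id": "A-10234",
            "status": "shipped",
            "eta": "2024-06-03",
            "internal_ref": "wh-7781",
            "audit_log": ["created", "picked", "packed"],
        },
    },
}

def clean_return(raw: dict) -> dict:
    """Keep only the fields the AI should reason about, with clear names."""
    order = raw["data"]["order"]
    return {
        "order_id": order["id"],
        "status": order["status"],
        "estimated_delivery": order["eta"],
    }
```

Less irrelevant data in the action's result leaves the model fewer details to misread or embellish.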

Detecting Hallucinations

Three tasks can be used together to detect hallucinations:

Retrieve Chat Log: This task collects the chat log, which captures all interactions between the user and the AI. This log serves as the primary record for evaluation.

Contextual Cross-Check with Another LLM: In this step, a different language model (LLM) reviews the chat log for potential inaccuracies. For the second LLM to perform this effectively, it requires predefined examples or context about what constitutes a "good" conversation or outcome (accurate responses, logical flow, factual consistency) and what a "bad" conversation looks like (misinformation, logical errors, or hallucinations). These examples help guide the LLM in recognizing discrepancies.

Parse Output for Verification: The final task parses the evaluation from the second LLM and generates a boolean value (true or false), indicating whether the original AI response was accurate (true) or if hallucinations were detected (false).
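The three tasks can be sketched as a small pipeline. This is a hypothetical outline, not Stubber's implementation: `reviewer` stands in for a call to the second LLM, the good and bad examples are placeholders, and the JSON reply format is an assumption.

```python
import json
from typing import Callable

# Hypothetical reviewer: sends a prompt to a second LLM, returns its text reply.
Reviewer = Callable[[str], str]

GOOD_EXAMPLE = "User: When do you open?\nAI: We open at 8am on weekdays."  # placeholder
BAD_EXAMPLE = "User: When do you open?\nAI: We are open 24/7 on the moon."  # placeholder

def format_chat_log(log: list[tuple[str, str]]) -> str:
    # Task 1: turn the retrieved chat log into plain text for review.
    return "\n".join(f"{speaker}: {text}" for speaker, text in log)

def build_review_prompt(chat_log: str) -> str:
    # Task 2: give the second LLM examples of good and bad conversations.
    return (
        "You review conversations for hallucinations.\n"
        f"Example of a GOOD conversation:\n{GOOD_EXAMPLE}\n\n"
        f"Example of a BAD conversation:\n{BAD_EXAMPLE}\n\n"
        'Reply only with JSON: {"accurate": true} or {"accurate": false}.\n\n'
        f"Conversation to review:\n{chat_log}"
    )

def parse_verdict(reply: str) -> bool:
    # Task 3: reduce the reply to a boolean. Unparseable replies count as
    # False (hallucination detected) so they get flagged for review.
    try:
        return json.loads(reply).get("accurate") is True
    except (json.JSONDecodeError, AttributeError):
        return False

def is_accurate(log: list[tuple[str, str]], reviewer: Reviewer) -> bool:
    return parse_verdict(reviewer(build_review_prompt(format_chat_log(log))))
```

Treating an unreadable reply as `False` is a design choice in this sketch: it errs toward flagging a conversation rather than silently passing it.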